Presentations 1 – Saturday 12th Dec. 2020:

followed by Zoom Webinar Q&A:

Abstracts

Mick Grierson, Nick Collins, Thor Magnusson, Chris Kiefer – Artificially Intelligent Audiovisualisations

Contemporary deep learning techniques are contributing new methods for creating and interacting with audiovisuals. Generative models can be trained to create both sound and video, and methods exist for manipulating these models creatively, offline and in realtime. How can models in these two domains be brought together meaningfully? We discuss the potential of AI models as creative materials for audiovisual composition, focusing on questions around cross-domain mapping, multisensory integration, realtime interactivity and state-of-the-art technologies.

Biographies:

Mick Grierson is Research Leader, UAL Creative Computing Institute. His research explores new approaches to the creation of sounds, images, video and interactions through signal processing, machine learning and information retrieval techniques. His research has been widely used by world-leading production companies, tech start-ups and artists including Sigur Ros, Christian Marclay, Martin Creed, Massive Attack, Arca and others. He is Principal Investigator on the £1 million Artificial Intelligence project MIMIC.

Nick Collins is a Professor in the Durham University Music Department with strong interests in artificial intelligence techniques applied within music, the computer and programming languages as musical instruments, and the history and practice of electronic music. He has performed as composer-programmer-pianist and codiscian, from algoraves to electronic chamber music. Many research papers and much code and music are available from www.composerprogrammer.com

Thor Magnusson is a Professor of Future Music at the University of Sussex. His work focuses on the impact digital technologies have on musical creativity and practice, explored through software development, composition and performance. Thor’s research is underpinned by the philosophy of technology and cognitive science, exploring issues of embodiment and compositional constraints in digital musical systems.

Chris Kiefer is a computer-musician, musical instrument designer and Lecturer in Music Technology at the University of Sussex. He performs with custom-made instruments, including an augmented self-resonating cello as half of improv-duo Feedback Cell, and with feedback-drone-quartet ‘Brain Dead Ensemble’. His research specialises in musician-computer interaction, physical computing, machine learning and complex systems.

Eleni-Ira Panourgia – Web-canvas: Creating an online interactive process between sound and image

This paper will discuss the interactive processes between sounds and images used in my piece Web-canvas. The work centres on the simultaneous transformation of image and sound through participation. Web-canvas integrates electroacoustic sound material and a digitised monoprint of an abstract landscape in a custom web application developed in HTML, CSS and Javascript. The multi-dimensional mode of this practice brings together elements of sound visualisation, mapping and sound design for interactive media to develop a new method for a concurrent visual and sonic interaction in the online environment. Colour, texture and shape are reflected through the sonic qualities of each piece of the canvas, which relate to the natural elements of the landscape. Web-canvas acts as both an audiovisual compositional tool and a participatory online space. It encourages users to transform its visual and sonic components by replacing its pieces in a plethora of possible ways. My initial sonic interpretation of the visual landscape is shared with the users and can be played back, reset, erased and recreated according to their own aesthetic decisions. With this method Web-canvas explores a mode of interaction between images and sounds based on online user input.

https://www.eleniirapanourgia.com/art/webcanvas

Biography:

Dr. Eleni-Ira Panourgia is an artist working at the intersection of visual and sound arts. Eleni-Ira is a Teaching and Research Fellow at Gustave Eiffel University (formerly University of Paris Est Marne-la-Vallée) and Associate Fellow of the Higher Education Academy. She has recently completed a PhD in Art at the University of Edinburgh titled ‘Co-composition processes: form, structure and time across sculpture and sound’. Eleni-Ira’s work focuses on intersections of sculpture, spatial dimensions and sound in a responsive and interactive way in relation to materials, their processes and technologies. She explores the potential of such complex morphologies within artistic, design and social processes. Eleni-Ira’s work has been exhibited and presented internationally in museums, galleries, festivals and conferences in the UK, Ireland, France, Switzerland, Italy, Greece, Cyprus and India. Eleni-Ira is a member of the Littératures, Savoirs et Arts (LISAA) Research Lab, the International Media Music and Sound Arts Network in Education (IMMSANE), the Research Centre for Creative-Relational Inquiry (CCRI), RAFT Research Group, Onassis Scholars’ Association, Greek Sculptors’ Association and the Chamber of Fine Arts of Greece. She is co-founder and managing editor of Airea: Arts and Interdisciplinary Research Journal.

Barry Moon and Doug Nottingham – Performing Live Visuals with Eye-Tracking Technologies

pincushioned have been producing live visuals for their performances since they started in 2007. Their greatest challenge is being able to control video while performing music. The most useful solutions have been (1) creating generative visuals where only broader changes are enacted in performance, and (2) having sound produced in the same software as the visuals. The first approach was made possible by controlling parameters in the TouchDesigner software with the same MIDI keyboard used for music performance. For the second, game-like 3D environments were navigated in the Unity software, with sounds attached to colliders and spaces within the environments. Recently, our video guru has returned to playing guitar in the duo, leaving his hands and feet too heavily occupied by music performance to control visuals. To address this, we have been experimenting with a Tobii Eye-Tracker 5 to navigate 3D environments in Unity. We will discuss some of our approaches.

Biographies:

pincushioned is the invention/fabrication of Arizona-based duo Barry Moon (Baz) and Doug Nottingham (Dug). Since 2007 they have been working from a remote/suburban desert bunker melding digital sounds and images with their analog counterparts – beating drums, destroying guitars, spinning dials, sliding faders, writing software, building instruments and projecting bizarre imagery. These garbage-pickers of music and art exploit anything/everything: Jay Z, Xenakis, or Rembrandt are no safer than Shostakovich, the Butthole Surfers, or Bill Viola from their synthesizing/thieving hands. They go beyond post-modernism and into pre-whatever, creating an abstract body of interactive millennial media art. Their ethos/nihilism is evidenced by a DIY/DUI search for “digital answers for unasked questions” or “D.A.F.U.Q”.

Liz K Miller – Exploring the Acoustic Forest through Visual Fine Art Practice

This paper presents ‘Forest Listening’: an evolving artwork that explores the audio-visual experience of listening in forests. I analyse the field recording of a forest rainstorm through visual diagramming and photographic cyanotype techniques, then I discuss the exhibition of the resulting artwork in multiple contrasting venues: a forest, a gallery and a conference setting.

I consider how listening to the sounds made by trees can reconnect humans to the forest, and how the combination of audio and visual can be used to enhance that connection. I use listening as a method for reengagement with the woodland environment and field recording to gather sounds of sylvan processes.

Through sound visualisation I explore and analyse the field recordings in order to discover and reveal the hidden and unnoticed sonic depths of the sylvan forest. The resulting multi-sensory audio-visual artworks are used to direct attention and provide audiences with alternative avenues of engagement with, and perspectives on, trees, providing a catalyst for further dialogue regarding the importance of forests in the fragile time of the Anthropocene.

I argue for multi-modal listening as a method for generating alternative perspectives that move towards considering the forest not as an ecosystem service (for use and exploitation by humans) but as a complex living multiplicity of interconnected life forms and resonating, vibrant processes, worthy of attention, celebration and auditory focus.

Biography:

Liz K Miller (b. 1983, Hexham) is a London-based artist and researcher. Her audio-visual practice spans cartography and printmaking to field recording and collaborative sound art projects. She graduated from Edinburgh College of Art (BA), Camberwell College of Art (MA), and was a print fellow at the Royal Academy Schools (2013 to 2016). In 2018 she was awarded an AHRC TECHNE scholarship to undertake a practice-based PhD at the Royal College of Art in the School of Arts and Humanities. Her research considers how, in this current time of climate and ecological breakdown, listening to the sounds made by trees can reconnect humans to the forest, and how the combination of audio and visual can be used to enhance that connection. Recent solo exhibitions include: ‘Forest Listening’ (2020) at Watts Gallery, Guildford, in partnership with Surrey Hills Arts; ‘The Circular Scores’ (2019) at Huddersfield Art Gallery for Huddersfield Contemporary Music Festival; and ‘Scordatura’ (2015) at Bearspace Gallery, London.

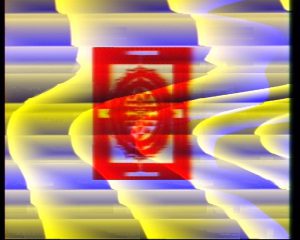

Yati Durant – Saundaryalahari: a search for a reciprocal audio-visual system

The Saundaryalahari project is a series of works based on an 8th Century Indian literary work in Sanskrit written by Adi Shankara called the Soundarya Lahari or Saundaryalahari (Sanskrit: सौन्दर्यलहरी) meaning “Waves Of Beauty”. The outputs of this project explore through music, sound and visuals the “non-verbal” creativity found in these ancient texts while utilising the structuralism of the spiritual/graphic formation of the Sricakra, which defines and arranges the verses from outside to inside. As part of this, the Saundaryalahari sound art “system” utilises signal modified electronics and interactive visuals to attempt to translate image into sound and back again – providing the opportunity for the music to have a “feed forward” loop to regenerate musical materials within a composition and interact directly with the performer or ensemble during a performance.

In this paper, I will explain the genesis and evolution of the current Saundaryalahari reciprocal audio-visual system and why going beyond an arbitrary representation of visuals to audio/music allows for more distinct co-compositional approaches in performance and creation. Considerations on research into synaesthesia, experimental animation and improvisation will be discussed along with an introduction to the most recent Saundaryalahari audio-visual sound art works by the author. The project is funded by a Creative Scotland – Sustaining Creative Research Grant with the goal of exploring how Sound Art works can play a role in potentially transcending inequities of excessive miscommunication (i.e. social media, fake news and other overly prolific verbal/text-based communication in media).

A blog on the current research strands of the Saundaryalahari project can be found here: http://www.yatidurant.com/saundaryalahari-blog.

Biography:

Yati Durant is an award-winning U.S.-born composer of concert and film music, Professor, sound artist, trumpeter and conductor. He is a visiting Professor of Film Music Conducting at the Rovigo Conservatory of Music “Francesco Venezze”. He is the former Programme Director of MSc Composition for Screen at the University of Edinburgh (2010 – 2019). Since 2019, Yati has been the Director of the International Media Music and Sound Arts Network in Education (IMMSANE), which held the 1st IMMSANE Zurich 2020 Congress at the Zurich University of the Arts in October 2020.

His compositions and film scores have received many prizes from international festivals, including a Panorama Prize at the 2008 Berlin International Film Festival for Erika Rabau – Der Puck von Berlin and a BAFA National Commerce Film Award nomination for the WDR/ARD documentary Ein Klavier geht um die Welt. He was a finalist in the 2009 Concorso Giovani Musicisti Europei in Aosta, Italy with his score to C. Chaplin’s The Vagabond. Recent highlights include his conducting of the Staatsorchester Braunschweig to his 2005 orchestral and electronics score for Battleship Potemkin (1925) at the opening of the Braunschweig International Film Festival and at the Murnau Film and Musikfest, Bielefeld in 2018, and the inclusion of his scores for restored films from the Jean Desmet Dream Factory collection at EYE Amsterdam in 2015. In the 1990s, Yati lived in New York City, where he worked in TV and film, writing for HBO, Walt Disney Productions, Fox, US Army, Jif, Waterman Pens and many others.

In Europe, Yati has worked for many state broadcasters, including the ARD and WDR, and many of his compositions and film scores have been published on CD and DVD. He is a frequent contributor and jury member to the most important film music competitions and festivals in Europe, including Soundtrack Cologne, Festival International du Film d’Aubagne and FMF Krakow. He also has an international profile as a performer of jazz trumpet and electroacoustic music, and he has performed in Italy, Germany, Poland, Czech Republic, India, France, Brazil and the United Kingdom.

International Media Music and Sound Arts Network in Education (IMMSANE): www.immsane.com

Personal website: www.yatidurant.com

Karel Doing – Meaning making beyond the human: biosemiotics and biophotographicity

The emergence of meaning is habitually placed within the human realm, in particular within the obscure entity that we call ‘mind’. The term is obscure as its material constitution is unknown. Still, we tend to disregard the gaping black hole that is left in our so-called ‘rational’ conception of the world. Attributing a similar meaning-making capacity to anything other than human is met with derision and ridicule. Music, poetry and abstract art are exceptions to the rule, providing a refuge where melody, rhythm and form are used to evoke embodied forms of presence and meaning beyond the strictly human realm. In my practice as an artist and filmmaker my starting point is a deliberate transposition of the prevalent anthropocentric worldview, inquiring into the meaning-making capacities of organic matter, chemical process and meteorological circumstances.

By working with the chemical potency of plants in conjunction with photochemical emulsion and the transformative power of sunlight, traces of this process can be captured in the form of still and moving images. The shape, size and reactivity of the leaves and petals act like musical notes, only hesitantly adjusted to their placement within a photographic or cinematic system. Through prolonged observation and interaction with these resilient and generous vegetal and meteorological agents, a form of mutual knowledge creation becomes possible.

Biography:

Karel Doing is an independent artist, filmmaker and researcher whose practice investigates the relationship between culture and nature by means of analogue and organic process, experiment and co-creation.

He studied Fine Arts at the Hogeschool voor de Kunsten, in Arnhem, the Netherlands, graduating in 1990. In the same year he co-founded Studio één, a DIY film laboratory. In 2001 he co-founded Filmbank, a foundation dedicated to the promotion and distribution of experimental film and video in the Netherlands.

In 2017, he received a PhD from the University of the Arts London. During his research he developed ‘phytography’, a technique that combines plants and photochemical emulsion. He has employed this technique to investigate how culture and meaning can be shared between the human and the vegetal realm. In his thesis he proposes new forms of humility, doubt and listening to be advanced in today’s overconfident and exploitative human culture.

His work has been shown worldwide at festivals, in cinemas, on stage and in galleries. In 2012 he received a FOCAL award for his film Liquidator. He regularly gives workshops in analogue film practice and is currently a lecturer in contextual studies at Ravensbourne University London. He is presently based in Oxford.

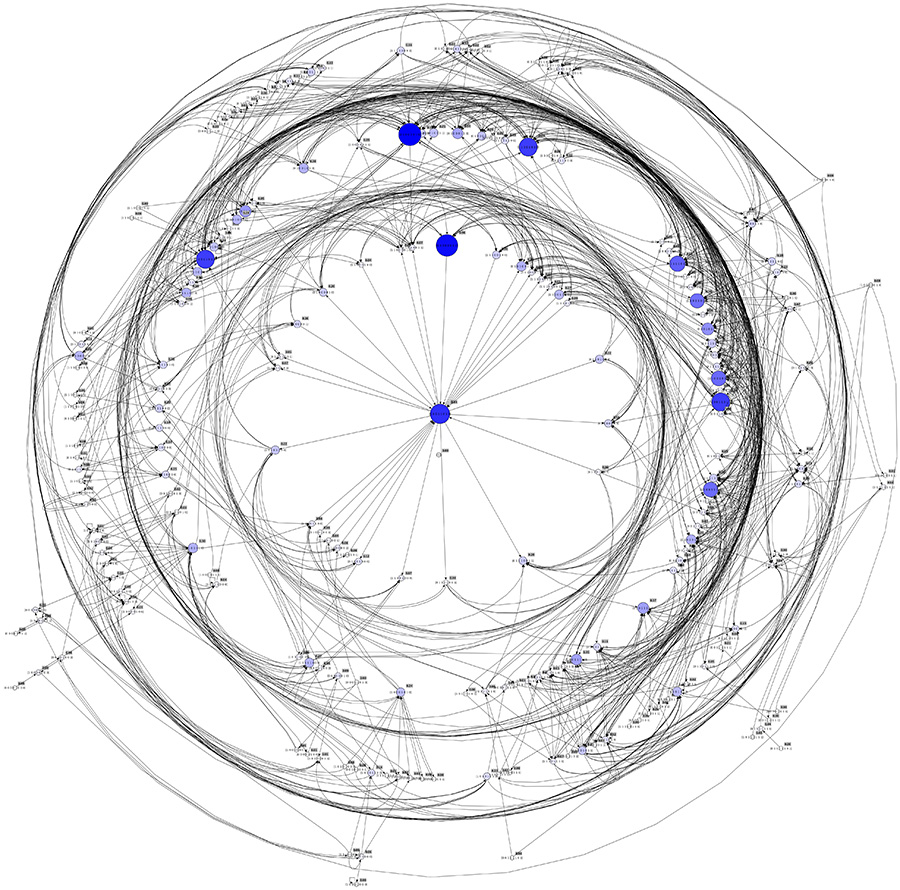

Rob Mackay – Following the Flight of the Monarchs

‘Following the Flight of the Monarchs’ is an interdisciplinary acoustic ecology project bringing together artists and scientists, connecting with ecosystems and communities along the migration routes of monarch butterflies as they travel the 3,000-mile journey between Mexico and Canada each year. The project, led by Rob Mackay at Newcastle University, connects with the international BIOM project led by Leah Barclay at The University of the Sunshine Coast, and SoundCamp to map the changing soundscapes of UNESCO Biosphere Reserves through art, science and technology.

Streamboxes are being installed along the monarch butterfly migration routes between Canada and Mexico. These livestream the soundscapes of these different ecosystems 24/7 via the Locus Sonus Soundmap (http://locusonus.org/soundmap/051/). The first of the boxes was successfully installed in the Cerro Pelón UNESCO monarch butterfly reserve in Mexico in 2018. The streams are being used for ecosystem monitoring and are also being integrated into artworks that raise awareness of the issues faced by the monarchs, whose numbers have declined by nearly 90% over the past two decades.

Several artistic responses have been created so far: an immersive audiovisual installation; a live telematic audiovisual performance; several pre-recorded performances within the reserves; and a 30-minute radio programme for BBC Radio 3’s Between the Ears. Future work includes VR installations incorporating 360 video and ambisonic audio.

Biography:

Rob Mackay is a composer, sound artist and performer. He is currently a Senior Lecturer in Composition at Newcastle University.

Recent projects have moved towards a cross-disciplinary approach encompassing soundscape ecology, audiovisual installation work, and human-computer interaction. His work has been performed in 18 countries (including broadcasts on BBC Radio 3, BBC Radio 1 and Radio France), and a number of his pieces have received international awards (Bourges, Hungarian Radio, La Muse en Circuit). He has held composer residencies at Slovak Radio (Bratislava), La Muse en Circuit (Paris), the Tyrone Guthrie Arts Centre (Ireland), Habitación del Ruido (Mexico City), CMMAS (Morelia), and the University of Virginia.

He was director of HEARO (Hull Electroacoustic Resonance Orchestra) and is editor for Interference, a journal of audio cultures. He is currently Chair of UKISC (UK and Ireland Soundscape Community). Several CDs and vinyl releases including Rob’s work are available. More information at https://robmackay.net.

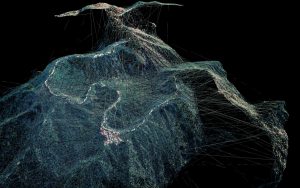

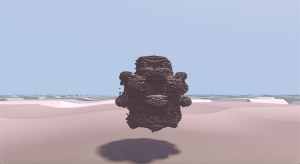

Dan Tapper – Expanding the point cloud: photogrammetry as sonic control

This paper details my recent experiments to expand photogrammetry techniques beyond three-dimensional models into interlinked audio-visual networks. The work utilises the point clouds underlying the meshed objects as a sort of cloud-based synthesis, similar to granular and concatenative methods of interacting with and moving through sound but navigated within a 3D space. The work fits into a larger body of work and research into how to engage with sound meaningfully as a creator within VR environments.

Biography:

Dan Tapper explores the sonic and visual properties of the unheard and invisible, from revealing electromagnetic sounds produced by the earth’s ionosphere to exploring hidden micro worlds and creating imaginary nebulas made from code. His explorations use scientific methods alongside thought experiments, resulting in rich sonic and visual worlds.

Presentations 2 – Sunday 13th Dec. 2020:

followed by Zoom Webinar Q&A:

Abstracts

Jon Weinel – Cyberdream VR: Visualising Rave Music in VR

This paper discusses Cyberdream, a prototype music visualisation for the Oculus Quest virtual reality (VR) headset. The project extends an earlier version for the GearVR, which was exhibited at various events and exhibitions in 2019. The original Cyberdream provided a flight experience through various surrealistic landscapes, accompanied by a vaporwave and rave music soundtrack. In the updated 2020 version for the Oculus Quest, the journey is improved through the use of game audio middleware software, which allows the soundtrack to seamlessly transition through a series of original breakbeat hardcore and ambient techno tracks. In the new version, interactive VR controllers are used to provide audio-visual sound toys, which allow the user to paint with sound. This paper will discuss the construction of Cyberdream with regard to macro and micro structural elements, which ultimately aims to provide a synaesthetic dreamscape with an electronic music soundtrack.

Biography:

Jon Weinel is a London-based artist, writer, and researcher whose main expertise is in electronic music and computer art. In 2012 Jon completed his AHRC-funded PhD in Music at Keele University regarding the use of altered states of consciousness as a basis for composing electroacoustic music. His electronic music, visual music compositions and virtual reality projects have been performed internationally. In 2018 his book Inner Sound: Altered States of Consciousness in Electronic Music and Audio-Visual Media was published by Oxford University Press. Jon has held various academic posts in the UK and Denmark. He is a Full Professional Member of the British Computer Society (MBCS), belongs to the Computer Arts Society specialist interest group, and is a co-chair and proceedings editor for the EVA London (Electronic Visualisation and the Arts) conference. Jon lectures at the University of Greenwich.

Andrew Knight-Hill – Audiovisual Spaces: Spatiality, Experience and Potentiality in Audiovisual Composition

The spatial turn, which swept the wider humanities, has not significantly informed our understandings of sound and image relationships. Bringing together spatial approaches from critical theory and applying these to the re-evaluation of established concepts within electroacoustic music and audiovisual composition, this chapter seeks to build a novel framework for conceiving of sound and image media spatially. The goal is to negate readings of sound & image media as oppositional strands which entwine themselves around one another, and instead position them – within critical discourse – as complementary dimensions of a unified audiovisual space.

Standard readings of audiovisual media are almost ubiquitous in applying temporal conceptions, but these conventional readings act to negate the physical material of the work, striate the continuous flow of experience into abstract points of synchronisation and afford, therefore, distanced observations of the sounds and images engaged. Spatial interpretations offer new opportunities to understand and critically engage with audiovisual media as affective, embodied and material.

The perspectives within this research have potential to be applied to a wide range of sound and image media: from experimental audiovisual film and VR experiences, to sound design and narrative film soundtracks; benefitting not only academics and students, but also creative industry practitioners seeking new terminologies and frameworks with which they can contextualise and develop their practices. Audiovisual space positions potentiality and anticipation to replace notions of dissonance and counterpoint, enabling the reframing of terminologies from electroacoustic music such as gesture and texture in light of their common spatial properties.

Applying practice research perspectives and phenomenological analyses of the author’s creative works GONG (2019) and VOID (2019), along with perspectives from embodied cognition, spatial approaches are demonstrated to embrace materiality, subjectivity, and embodied experience as fundamental elements within our understandings of the audiovisual.

Biography:

Andrew Knight-Hill (1986) is a composer specialising in studio composed works both acousmatic (purely sound based) and audio-visual. His works have been performed extensively across the UK, in Europe and the US. From January 2021 he will be an AHRC Early Career Leadership Fellow, exploring concepts of Audiovisual Space, seeking to recontextualise the ways in which we understand creative processes when working with Sound-Image Media. He is also Co-Investigator on the AHRC Research Grant “A Sonic Palimpsest”, exploring how sound can provide new insights and access into history through the case study of the Historic Royal Naval Dockyard at Chatham, Kent, and core project member for the European Network “Reconfiguring the Landscape” led by Prof. Natasha Barrett out of the Norwegian Academy of Music (Oslo) in partnership with IRCAM (Paris), IEM (Graz) and EMS (Stockholm) exploring site-specific approaches in ambisonic composition.

He is Senior Lecturer in Sound Design and Music Technology at the University of Greenwich, convenor of the SOUND/IMAGE festival and director of the Loudspeaker Orchestra.

Bryan Dunphy – ImmersAV: A Toolkit for Immersive Audiovisual Composition

Virtual Reality (VR) can be described as an emergent medium in the context of abstract audiovisual art and visual music. The vast majority of material created for VR is related to computer games and 360 degree video. However, the fully immersive capabilities of contemporary VR systems also provide many exciting opportunities for the exploration of abstract audiovisual composition.

ImmersAV is an open source toolkit for immersive audiovisual composition. It was built around a specific approach to creating audiovisual work that utilises generative audio, raymarched visuals and interactive machine learning techniques. This talk will describe the toolkit and the underlying artistic principles that motivated its development. The guiding principle throughout the development of the toolkit is the idea that audio and visual material should be treated equally. This concept can be found throughout audiovisual art and visual music literature, and has been identified as a guiding principle of several notable practices. The concept of equality between audio and visual material is manifested within the ImmersAV toolkit as the ability to generate material within specific contexts and also to map data between these contexts in an omnidirectional manner.

The original work obj_#3, created using the ImmersAV toolkit, will be discussed as an example of the toolkit in practice. This piece explores concerns specific to the composition of abstract audiovisual material within an immersive environment. Following this discussion, directions for future development of the toolkit and areas of compositional interest will be explored.

Biography:

Bryan Dunphy is an audiovisual composer, musician and researcher interested in generative approaches to creating audiovisual art. His work explores the interaction of abstract visual shapes, textures and synthesised sounds. He is interested in exploring strategies for creating, mapping and controlling audiovisual material in real time. His background in music has motivated him to gain a better compositional understanding of the combination of audio and visuals. His recent work has explored the implications of immersive experiences on the established language of screen-based audiovisual work. He is currently completing his PhD in Arts and Computational Technology at Goldsmiths, University of London.

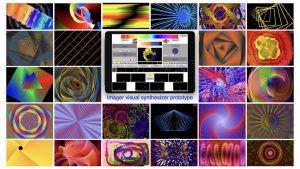

Fred Collopy – The Imager Project: Designing the Future of Painting

In his 1923 book The Future of Painting, Willard Huntington Wright argued that abstract art was really not about painting at all, but revealed the contours of a new art, the art of light. By 1930 Thomas Wilfred had coined the term Lumia to describe light structures defined around color, form, and motion. Since then, many artists, musicians, film-makers, and inventors have explored these ideas with an eye to creating visual works and instruments aimed at attaining something like the emotional expressiveness of music.

In this project, we will build a visual synthesizer that runs on iPads. It will be coded in Apple’s declarative language, SwiftUI and take advantage of their latest technologies including the reactive framework Combine, and the low-level graphics engine Metal. While designed to run as a stand-alone app, it will also support MIDI controllers and the use of resources created by other graphics applications.

The project is a vehicle for us to explore issues in modern software design and visual music and to see how it feels to build a substantial integrated app based in these technologies from the ground up.

In the closing pages of his prescient little book, Wright described all that would have to be achieved if the art of light is not to remain “inferior to the other arts” and concluded: “That day may not come for many decades—perhaps a century.” Well then, maybe we’re just about on schedule.

Biography:

Fred Collopy is Professor Emeritus of Design and Innovation at Case Western Reserve University. He received his PhD in Decision Sciences from the University of Pennsylvania in 1990. He has created numerous computer-based instruments, including the Imager visual synthesizer, an instrument for playing abstract visuals musically. He has written and spoken extensively on design and on visual music, including in Leonardo, Glimpse, and the Journal of Visual Programming Languages and at numerous conferences, including Expanded Animation 2020, the Center for Visual Music Symposium 2018, Seeing Sound 2016, Sonic Light 2003, SIGGRAPH 2000, the IEEE Symposium on Visual Languages 1999, and the International Symposium on Electronic Art 1998. In 2000 he produced the visual CD, Unauthorized Duets and he co-edited the book Managing as Designing, published by Stanford University Press in 2004. He was a visiting scientist at IBM’s Thomas J. Watson Research Center in 1998-99, at Cornell University’s Human Computer Interaction Lab in 2006 and at Signal Culture in 2017. Since 1998, his web site at RhythmicLight.com has been a reference in the field.

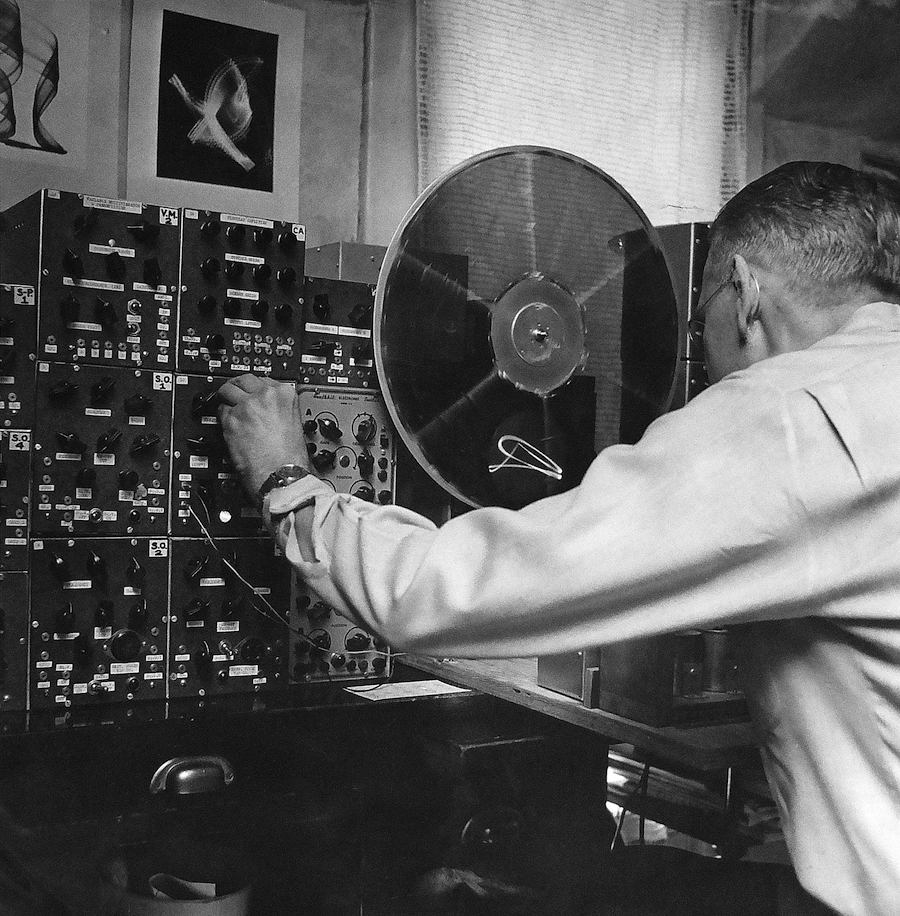

Skooby Laposky – The Modulated Path: Ben F. Laposky’s Pioneering Electronic Abstractions

Skooby will present highlights from research on his forthcoming book, The Modulated Path: Ben F. Laposky’s Pioneering Electronic Abstractions. His great uncle Ben F. Laposky is widely considered to be one of the earliest electronic visual artists. Laposky operated independently in rural post-war America photographing thousands of abstract electronic forms that were generated on his custom modular analog visual synthesizer.

Biography:

Skooby Laposky is a sound designer, composer and musician based in Cambridge, Massachusetts. His work includes scores for television and film, product sound design, numerous dance 12”s and most recently large-scale public sound art. Laposky loves all sound-making devices and techniques, but has an affinity for the tangled affair of modular synthesis. He is currently working on a definitive book about his great uncle, Ben F. Laposky, widely considered to be a pioneer of electronic visual art.

Trent Kim – Lumia without Light

Lumia was an early- to mid-20th-century visual art form pioneered by the Danish-born American artist Thomas Wilfred. Historically, Lumia emerged at the tail end of colour music and declared the independence of light as an art form. Wilfred rejected any associations between colour music and Lumia, and used music as a metaphoric counterpart to consolidate the independence of light. Wilfred argued that Lumia exists in the architecture of darkness, as opposed to the silence of music, and that its materiality reveals its exclusive structure of performance.

This paper proposes Lumia as a structure of performance and draws critical comparisons with other models of structure including Epic Theatre by Bertolt Brecht, Sculpture Musicale by Marcel Duchamp, Carnivalesque by Mikhail Bakhtin, and Theatre of the Absurd by Samuel Beckett.

Through comparative analysis between the above structures as well as a metaphoric adaptation of Lumia as a structure of sound, Trent Kim argues that Thomas Wilfred’s Lumia has suggested a new paradigm for storytelling where the world is revealed by the perspective of light. It offers potential for other performance art forms to construct their performance through the structure of Lumia.

Biography:

Trent Kim is a South Korean British artist and educator based in Glasgow. His practice and research focus on light, performance and new media technology, and his artworks range from theatre lighting design and projection art to video art and Lumia. Currently, he is a lecturer in New Media Art at University of the West of Scotland and a PhD candidate in animation at the Royal College of Art.

Brian McKenna – VS010 – 1080pPants: Synchronization and Blanking Generator for Progressive Video Formats

This DIY video-synchronization signal-generator project seeks to fill some gaps in the availability of High-Definition video utilities for analogue video work. In addition to providing stable video synchronization for displays, HD video recorders, and analogue to digital converters, the circuit generates standard video blanking-signals and inserts them into RGB component video channels, thus helping to solve common loss-of-signal and intensity-shift problems for video experimenters. The design is quite simple; it sidesteps the use of micro-controllers; it is cheap and easy to build from commonly available parts; it can be configured to a wide array of HD, SD, and PC display formats. The DIY PCB is stackable with pin-headers for multichannel use and allows for add-on circuit boards such as logic-gates for composite-sync output, amplifiers for compatibility with video modules, video signal generators and so on. The PCB size and layout allow for eurorack or stand-alone use.

A link to the webpage (in progress) is here: http://mediumrecords.com/vs0/vs010/vs010.html

Biography:

Brian D. McKenna received a multi-disciplinary BFA in music & visual art from the University of Lethbridge and an MFA at the Sandberg Institute in Amsterdam. McKenna’s practice deals with technological environments and propaganda in relation to the tools we use and how we use them. Besides aesthetic considerations, his investigations highlight the importance of critical inquiry into technologies and the quasi-religious social structures they impart. His collaborative and solo works have been presented internationally at venues such as Atonal Festival in Berlin and The Ural Industrial Biennial in Ekaterinburg, Russia. Since 2006 McKenna has been designing and building analogue video synthesis devices, and since 2014 he has researched media technology at the Sandberg Institute in Amsterdam.

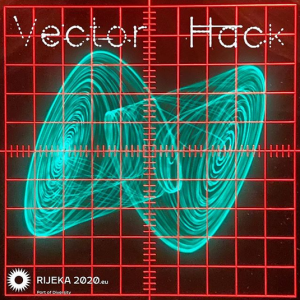

Derek Holzer, Chris King, Ivan Marušić Klif – Vector Hack

The second edition of the Vector Hack festival, dedicated to experimental vector graphics, took place from October 2nd to 4th as part of Rijeka 2020 European Capital of Culture in Croatia, continued in Ljubljana in collaboration with Ljudmila on October 7th, and ended in Dubrovnik in collaboration with Art Radionica Lazareti on October 9th and 10th. The event was organized by Ivan Marušić Klif, Chris King, and Derek Holzer, and combined both remote and local activities as a response to the ongoing global pandemic.

The event featured researchers, developers, and performers from around the world as well as from the strong regional scene of Italy, Austria and Croatia. This unique combination of community meeting, conference, and international arts festival brought together practitioners in the field of laser and oscilloscope art for talks, workshops and live performances. It was a chance to experience audiovisual works which employ unusual, analog, and sometimes obsolete display technologies, and to bathe your eyes in a different kind of light.

Experimental vector graphics artists use lasers, analog oscilloscopes, old video game consoles, mechanical drawing machines, and other equipment to produce images using an X, Y, and Z coordinate system. They often feed these display devices with elaborate handmade electronic synthesizers, complex custom-made software, or other experimental instruments of their own design. The resultant images are often sinuous, intensely energetic, and radically different from what is possible on a digital screen!

Large parts of the program are available online at http://www.youtube.com/c/VectorHackFestival/. This presentation for Seeing Sound will give a brief overview of the various presentations and performances.

Biographies:

Derek Holzer (USA 1972) is an audiovisual artist, researcher, lecturer, and electronic instrument creator based in Stockholm. He has performed live, taught workshops and created scores of unique instruments and installations since 2002 across Europe, North and South America, and New Zealand. He is currently a PhD researcher in Sound and Music Computing at the KTH Royal Institute of Technology in Sweden, focusing on historically informed sound synthesis design.

Christopher King is an artist, educator and conservator primarily concerned with historic and contemporary electronic video and media art. His blog and online discussion group video circuits explores early abstract and synthetic image making practices such as video synthesis, experimental animation, visual music, cymatics and graphic scores. Chris regularly performs live visual music using electronic video and audio synthesis techniques. He also teaches workshops on these techniques and the history and context of electronic intermedia and visual music practice. He currently works as an assistant time-based media conservator at Tate.

Ivan Marušić Klif was born in 1969 in Zagreb. He graduated from the School of Audio Engineering in Amsterdam in 1994. His field of interest includes fine arts (light installations and kinetic objects), music and sound for theatre and performance art. From 1996 he started working with computers, mostly in the field of multimedia programming and interactive video installations. He has exhibited and performed in Holland, Germany, Belgium, Czech Republic, Japan, USA, Austria, France, Denmark, Italy, Poland, Macedonia and Croatia.
