Maura McDonnell – Visual Music – The Colour Tone Analogy and Beyond

Visual music works now take place in many different performance environments and use a myriad of technological tools. Because visual music has a long and interesting history across disciplines, media and technologies, there are many possible starting points for tracing its origins. This paper will look at the origins of visual music from Sir Isaac Newton’s colour-tone analogy, used in his experiments with spectrum colour, through to the colour organs of the 18th to the 20th centuries. Moving beyond the colour-tone analogy towards a visual music, the paper will then examine how visual music works have become more complex, encompassing musical expression, emotion and drama. Without leaving behind the analogical thinking that underlies the visual-to-music mappings in some works, the focus will be on how a visual music artist or collaborator creates meaningful connections between the visual and the musical, drawing out the combined music and image as a whole event.
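
Newton likened the seven colours he distinguished in the spectrum to the seven notes of a musical scale. As a rough illustration of what such a colour-tone analogy looks like when written down as a lookup, here is a minimal Python sketch; the pairing of the notes C to B with red to violet is an assumption made for the example and is not a reconstruction of Newton’s own divisions of the spectrum.

```python
# Illustrative colour-tone lookup: seven spectral colours paired with seven
# scale degrees. The pairing is an assumption for this example, not a
# reconstruction of Newton's own divisions of the spectrum.
SPECTRUM = ["red", "orange", "yellow", "green", "blue", "indigo", "violet"]
SCALE = ["C", "D", "E", "F", "G", "A", "B"]  # hypothetical choice of scale

COLOUR_OF_NOTE = dict(zip(SCALE, SPECTRUM))


def colours_for_melody(notes):
    """Return the colour paired with each note name in a melody."""
    return [COLOUR_OF_NOTE[note] for note in notes]


print(colours_for_melody(["C", "E", "G"]))  # ['red', 'yellow', 'blue']
```

A fixed one-to-one table of this kind is the analogical mapping that the paper goes on to move beyond.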

Biography:

Maura McDonnell is a PhD candidate on the Digital Arts and Humanities programme at Trinity College, Dublin, where she is based at the Arts Technology Research Lab; she was awarded a Digital Humanities and Arts Fellowship to complete her PhD studies. Her PhD topic is visual music and embodied cognition, and it includes an arts practice component. Maura is also a practising visual music artist and a part-time lecturer on the M.Phil. in Music and Media Technologies programme at Trinity College.

After discovering the power of sound and image through a number of experimental audio-visual works, Maura embarked on a continuing inquiry into the origins and existing practice of visual music. She creates visual music works both as a solo artist and in collaboration with music composers, and documents this work on her blog and website at http://visualmusic.blogspot.com and http://www.soundingvisual.com

Maura’s visual music pieces have been shown around the world, including in China, Finland, Germany, Ireland, Singapore, the US and the UK. Her collaborative work Silk Chroma (2010), with the Irish composer Linda Buckley and sound and acoustics experts Dermot Furlong and Gavin Kearney, won an honorary award at the Visual Music Award in Frankfurt, Germany, in 2011.

Carol MacGillivray and Bruno Mathez – Screams of an acorn – The Diasynchronoscope and new ideas in the morphology of audiovision

The Diasynchronoscope is a new medium for creating screen-less animation using real concrete objects. Time is choreographed in space, taking time-based techniques from animation and converting them to the spatial. This novel technique has been called the ‘diasynchronic’ technique, and the system the ‘Diasynchronoscope’. The system draws on tropes from audiovisual research, animation and Gestalt grouping to create a replicable, embodied perception of concrete objects moving in precise synchrony with sound, but unmediated by lens or screen. Through discussion of a recently exhibited artwork, ‘Stylus’, we interrogate the Diasynchronoscope as an emergent new medium.
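
The idea of converting frames in time into objects in space can be sketched as a lighting schedule. The Python below is a minimal sketch under stated assumptions: each former animation frame is a separate physical object, objects are revealed one at a time by switching their lights on in sequence at a fixed frame rate, and the sequence is started on a sound onset. The names `Cue`, `lighting_schedule` and `start_on_beat` are hypothetical; this is not the Diasynchronoscope’s actual control system.

```python
from dataclasses import dataclass


# Hypothetical sketch: each former animation frame is a physical object at a
# fixed position, and "playing" the animation means lighting the objects one
# after another, so the apparent motion happens in space rather than on a screen.
@dataclass
class Cue:
    obj: int      # index of the physical object (the former frame number)
    on: float     # time in seconds when its light switches on
    off: float    # time in seconds when its light switches off


def lighting_schedule(n_objects, fps, start=0.0):
    """Light each object for exactly one frame period, in order."""
    period = 1.0 / fps
    return [Cue(i, start + i * period, start + (i + 1) * period)
            for i in range(n_objects)]


def start_on_beat(onsets, n_objects, fps, beat_index):
    """Start the sequence exactly on one of the given sound onsets (seconds)."""
    return lighting_schedule(n_objects, fps, start=onsets[beat_index])


for cue in start_on_beat([0.0, 0.5, 1.0], n_objects=4, fps=25, beat_index=1):
    print(cue.obj, round(cue.on, 3), round(cue.off, 3))
```

Because each cue ends exactly when the next begins, only one object is lit at a time, which is one simple way to keep the perceived motion locked to the chosen sound onset.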

We examine some key formalisms and boundaries of the medium and suggest that certain tropes inherent in creating a replicable embodied experience offer a unique insight into ideas of synthesizing sound and vision in the round. The focus of this paper is on how ideas in audiovisual research have informed the genesis of the Diasynchronoscope and, in turn, how formalisms developed in the Diasynchronoscope may inform current audiovisual practice.

Specifically, we discuss how the Diasynchronoscope has eschewed the colour/music analogy common to many early audiovisual creations and instead adopted the Dadaist Kurt Schwitters’s paradigm of ‘Merz’: bringing every conceivable material together for artistic purposes, with each individual material (both aural and concrete) contributing equally to the same effect. The paper also suggests that because the Diasynchronoscope experience is a truly embodied one, the medium extends the artist Paul Klee’s synaesthetic concepts of ‘polyphonic’ painting, in which time and space become intrinsic to one another.

Biographies:

Carol MacGillivray and Bruno Mathez met at Goldsmiths, University of London, in 2011 and began an artistic collaboration using their jointly developed technique, in which concrete objects are animated through selective attention. These works not only combine their shared interests in creating embodied, kinetic art but are also the result of a unique marriage of skills. Carol is a sculptor and researcher from a background in animation and film editing, and spent 20 years working across documentary, drama, music videos and commercials. Her practice-based PhD, ‘Choreographing Time: Developing a system of Screen-less Animation’, chronicles the development of the Diasynchronoscope. Bruno is a French audiovisual artist living in London. He has created visuals for music concerts, operas, dance and theatre shows, has exhibited audiovisual installations including the light-to-sound installation Photophonics (Royal Festival Hall), and is part of the interactive audiovisual group The Sancho Plan (Ars Electronica).

Michelle Lewis-King – Touching Sound: Pulse Project

This paper presentation explores Pulse Project (2011 – ), a performance series researching the relational interfaces between art, medicine, technology, self, other, and the pre- and post-modern, using touch, graphic notation, drawing and algorithmic composition to instrumentalise the relationship between artist and audience. Pulse “reading”, case-history notation, live hand-drawn graphic notation of participants’ pulses and the programming of bespoke soundscape compositions are all used together as methods for exploring the relationship between touching, hearing and seeing sound.

Drawing upon my experience as a clinical acupuncturist (with training in biomedicine), I use intuitive touch together with traditional Chinese medical and musical theories and SuperCollider (an audio synthesis programming language) to compose bespoke visual scores and algorithmic soundscapes expressive of the interior aspects of an individual’s embodied being.

Each participant’s pulse is interpreted as a unique set of sound-wave images based on the lexicon of traditional Chinese pulse diagnostics (a complex set of 28+ waveform images corresponding to states of being) and in accordance with traditional Chinese music theory. Clinical and somatic impressions are translated into drawings and graphic notations, which are used to program each algorithmic soundscape. The graphic notations and sound files are given to each participant as an artwork and as a document of the encounter.
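
The project composes its soundscapes in SuperCollider; purely as an illustration of turning a pulse reading into sound, here is a Python sketch that renders a tone whose loudness swells once per heartbeat. “Slippery” and “wiry” are names from the traditional pulse lexicon, but assigning them a sine and a square-like waveform, and the function `pulse_tone` itself, are hypothetical choices for this example, not the artist’s method.

```python
import math

# Hypothetical pulse-to-sound mapping. "Slippery" and "wiry" are names from the
# traditional pulse lexicon, but the waveforms assigned to them here are
# illustrative assumptions only.
WAVEFORMS = {
    "slippery": math.sin,                                          # smooth, rounded
    "wiry": lambda phase: 1.0 if math.sin(phase) >= 0 else -1.0,   # taut, square-like
}


def pulse_tone(bpm, quality, freq=220.0, seconds=2.0, sr=8000):
    """Render a tone whose loudness swells once per heartbeat."""
    wave = WAVEFORMS[quality]
    beat_hz = bpm / 60.0
    samples = []
    for n in range(int(seconds * sr)):
        t = n / sr
        # Raised-cosine envelope: 0 at each beat boundary, 1 midway between.
        env = 0.5 * (1 - math.cos(2 * math.pi * beat_hz * t))
        samples.append(env * wave(2 * math.pi * freq * t))
    return samples


tone = pulse_tone(bpm=72, quality="wiry")
print(len(tone), max(abs(s) for s in tone))
```

The pulse rate sets the rhythm of the envelope while the pulse quality sets the timbre, so two dimensions of a single reading stay audible as separate features.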

Pulse Project introduces a new method for touching, visualising and transposing inaudible sound by using ancient and pre-modern medical approaches to the body in order to reconsider contemporary art, science and technology practices.

Biography:

Michelle Lewis-King is an artist and lecturer exploring sound in relation to embodiment; in 2011 she was awarded a PhD studentship in Digital Performance by the Cultures of the Digital Economy Research Institute at Anglia Ruskin University. Michelle’s research investigates the contemporary convergence of science, art, touch and technology. Her creative practice draws upon her transdisciplinary training in fine art, performance, audio programming, Chinese Medicine, biomedicine and clinical practice. Michelle has published several papers, most recently in the current issue of the Journal of Sonic Studies, and has given presentations and demonstrations at conferences in the U.S., Berlin, Copenhagen and the UK. She has shown her work both nationally and internationally. Recent group shows include ‘Digital Futures’ at the V&A Museum, ‘Artist’s Games’ at Spike Island, ‘Future Fluxus’ at Anglia Ruskin Gallery (curated by Bronac Ferran and futurecity as part of the 50th anniversary of Fluxus), ‘Experimental Notations’ at The Royal Nonesuch Gallery in Oakland, CA, ‘Hot Summer Salon’ at the Oakland Underground Film Festival and ‘Rencontres Internationales’ at various locations in Paris, Madrid and Berlin.

Ireti Olowe – Real-Time Graphic Visualization of Multi-Track Sound

This body of research seeks to investigate new approaches to sound visualization using pre-mixed, independent streams of music. The simultaneous application of sound visualization across interdependent, separate streams of sound provides an exploded view into the visual dimension of the listening experience.

The existing practice of sound visualization for performance predominantly uses a single track of mixed sound. Existing forms of sound visualization for performance that have used multiple tracks of sound have done so to trigger environmental changes within the listening environment, to synthesize aural and visual aesthetics, and to explore auditory graphing. This novel approach of visualizing multiple tracks of sound simultaneously offers an opportunity to investigate visually the aural relationships between parallel sonic events, as well as the relationships between aesthetics and aural perception.
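
One simple way to give each stem its own visual voice is to compute a loudness envelope per track rather than for the mix. This Python sketch assumes the stems are available as separate lists of float samples and maps each track’s frame-by-frame RMS level to a normalised brightness; the function names and the brightness mapping are illustrative assumptions, not the method of this research.

```python
import math


# Hypothetical sketch: a loudness (RMS) envelope per independent stem, so each
# track can drive its own visual element instead of the whole mix driving one.
def rms_frames(signal, frame_size):
    """Root-mean-square level of each complete, non-overlapping frame."""
    levels = []
    for start in range(0, len(signal) - frame_size + 1, frame_size):
        frame = signal[start:start + frame_size]
        levels.append(math.sqrt(sum(x * x for x in frame) / frame_size))
    return levels


def visual_params(stems, frame_size=512):
    """Map each named stem to a per-frame brightness between 0 and 1."""
    params = {}
    for name, signal in stems.items():
        levels = rms_frames(signal, frame_size)
        peak = max(levels, default=0.0) or 1.0  # silent stems stay at 0
        params[name] = [level / peak for level in levels]
    return params


sr = 8000
drone = [0.3 * math.sin(2 * math.pi * 110 * n / sr) for n in range(sr)]
clicks = [1.0 if n % 2000 == 0 else 0.0 for n in range(sr)]
brightness = visual_params({"drone": drone, "clicks": clicks})
print({name: len(values) for name, values in brightness.items()})
```

With the stems kept apart, the steady drone and the sparse clicks produce visibly different brightness patterns, which would be blurred together if only the mixed track were analysed.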

This research is concerned with music created from non-musical sounds, whose characteristics are expressed through textures and transformations rather than notes, tones and chords. The music that meets this criterion is the diverse genre of electroacoustic music. In addition to exploring behavior within its spectral structure, this research will explore images of sound whose form is directly influenced by its analysis, generating shapes that embody its salient properties.
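
Shapes can be driven by spectral descriptors as well as by loudness. The spectral centroid (the amplitude-weighted mean frequency of a frame) is a standard descriptor of perceived brightness; the sketch below computes it with a naive discrete Fourier transform and maps it to a polygon’s vertex count. The mapping and the names `spectral_centroid` and `shape_vertices` are hypothetical, and a real-time system would use an FFT instead of the naive transform.

```python
import cmath
import math


# Hypothetical sketch: the spectral centroid of a frame sets how many
# vertices a shape has, so brighter sounds draw more intricate shapes.
def spectral_centroid(frame, sr):
    """Amplitude-weighted mean frequency (Hz) from a naive DFT of one frame."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):  # bins from 0 Hz up to the Nyquist frequency
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(acc))
    total = sum(mags)
    if total == 0:
        return 0.0
    return sum(k * sr / n * m for k, m in enumerate(mags)) / total


def shape_vertices(centroid_hz, sr):
    """Map the centroid to a vertex count from 3 (triangle) to 24."""
    ratio = min(centroid_hz / (sr / 2), 1.0)
    return 3 + round(21 * ratio)


sr, n = 6400, 64
frame = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(n)]
centroid = spectral_centroid(frame, sr)
print(round(centroid), shape_vertices(centroid, sr))
```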

This paper will discuss the visualization of interdependent sonic relationships within music, with the aim of enhancing the listening experience and advancing the state of the art of real-time graphic visualization.

Biography:

Ireti Olowe is a researcher who is interested in the abstractions of common observances and the stochastic results of natural occurrences. She is interested in pushing the boundaries of perception and investigating its limits spatially and cognitively.

She earned a BS in Electrical Engineering from Northwestern University, concentrating on signal processing and semiconductors, and an MS in Communications Design from Pratt Institute. Her master’s thesis is on the semiotics and language of light, dark and shadow. She studied Digital Media at Hyper Island in Stockholm, Sweden, where she directed her interests toward technology and programming.

Joe Osmond – The Colour of Silence

There are few places that inspire silence, and still fewer moments of performance that are truly silent: meditation and reflection have been subsumed by an overwhelming desire to fill every space with sound. But where does this leave an artist who explores sound and jazz improvisation and its relationship with abstract imagery? In researching Digital Harmony (Whitney 1980), am I as guilty as John Whitney, who, by seeking a true complementarity of music and visual art, seems to challenge the concept of silence? If every note has a visual twin that can be rendered simultaneously to achieve digital harmony, what colour or shape is silence?

In this presentation, I consider a number of compositional possibilities that may begin to define and recognise the art of silence. Referencing sources that include Oscar Peterson’s thoughts on hearing the spaces between the notes and Pierre Boulez’s concept of music as a counterpoint made up of sound and silence, “The Colour of Silence” seeks to contextualise the individuality of “seeing sound”.

Informed by conversations that began with “Birdsong for Prisoners” (Osmond 2011) and practical explorations into immersive projections including “Psychedelic Starlings” (Osmond and Bicknell – Glastonbury 2012) and “Ribbons of Sound” (Osmond-Womad 2013) this presentation also draws on the harmonic theories of John Whitney and David Liebman. By asking ‘what do I see when I hear this sound?’ and ‘how do I find stillness in a multi-sensory world?’ “The Colour of Silence” arises from continuing research into “Abstract Animation and The Art of Sound”.

Biography:

Joe Osmond is an interactive artist, animator, poet and musician currently completing a practice-based PhD at Birkbeck College, The University of London, in “Abstract Animation and the Art of Sound”. He has worked in 21 countries, engaging with disability groups to make the creative arts accessible to all. This work has included creating interactive learning strategies for Arabic teachers in Kuwait and working with the visually and hearing impaired on a number of EU Projects. He has regularly exhibited in London and Hampshire and continues to develop original sound compositions in the field of abstract animation.

Joe’s most recent compositions include: “Psychedelic Starlings”: An abstract animation rendered from original footage filmed on the Somerset Levels. Projected with live improvised jazz at Glastonbury Town Hall (7th April 2012) and subsequently shown at The Immersive Vision Theatre, University of Plymouth (16th February 2013).

“Birdsong for Prisoners”: An original composition created from a variety of sources including birdsong, improvised jazz and the creative use of piezo mics to record the rarely heard sounds of the human smile. (Presented and published at EVA London 2011.)

Tom Richards – Toward Mini-Oramics

Daphne Oram (1925 – 2003) was a proponent of drawn sound techniques, and the inventor of the Oramics Machine: an innovation (circa 1966) that bears comparison to both the RCA and ANS synthesisers. Following up from Dr Mick Grierson’s lecture at Seeing Sound 2011, this paper will outline briefly some new discoveries regarding Oram’s legacy, before taking a more detailed look at an unrealised project from the 1970s.

Initial research has led to the tentative conclusion that the Oramics Machine, although remarkable conceptually, was difficult to work with, beset with technical problems and never truly finished. Realising that compromise was necessary if the commercialisation of Oramics was to be successful, Oram turned her thoughts to the production of a simpler version of her machine, to be marketed to composers and educators.

Oram’s plans for Mini-Oramics will be examined and contrasted with contemporaneous developments in music technology. It will then be argued that Mini-Oramics could have had an important impact had it been launched in the early 1970s, when commercial music-sequencing techniques were often restrictive and lacking in nuance, with unintuitive user interfaces (until the advent of MIDI and affordable computing). This historical potential is also being investigated in creative practice, where a hardware version of Mini-Oramics is being built. On completion, this prototype will be evaluated alongside its technological ‘competitors’. It is hoped that this project will not only extend our understanding of the Oramics technique but also help to illustrate the design challenges faced by Oram and her technical collaborators and peers.
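
The contrast between drawn control and step sequencing can be illustrated with a hand-drawn curve that is read continuously. This Python sketch assumes a curve stored as (position, height) points with heights from 0 to 1, read by linear interpolation and mapped exponentially to frequency; it illustrates the general drawn-sound principle only, not the Oramics or Mini-Oramics hardware, and `read_curve` and `height_to_hz` are hypothetical names.

```python
import bisect


# Hypothetical sketch of drawn-sound control: a hand-drawn curve, stored as
# (position, height) points with heights from 0 to 1, can be read at any
# moment, giving a continuous control signal rather than fixed steps.
def read_curve(points, x):
    """Linearly interpolate a drawn curve (points sorted by position) at x."""
    xs = [p[0] for p in points]
    i = bisect.bisect_right(xs, x)
    if i == 0:
        return points[0][1]          # before the first point: hold its height
    if i == len(points):
        return points[-1][1]         # at or beyond the last point: hold its height
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def height_to_hz(h, low=110.0, high=880.0):
    """Exponential mapping: equal changes in height give equal musical intervals."""
    return low * (high / low) ** h


pitch_line = [(0.0, 0.0), (0.5, 1.0), (1.0, 0.5)]
for x in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(x, round(height_to_hz(read_curve(pitch_line, x)), 1))
```

A step sequencer would only ever output the values stored at its fixed steps; reading the curve at arbitrary positions is what gives glides and nuance between them.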

Biography:

Tom Richards has been walking the line between Sonic Art, Sculpture and Music since graduating with an MA in Fine Art from Chelsea College of Art in 2004. He has exhibited and performed widely in the UK, as well as internationally in the US, Germany and Sweden. Selected exhibitions and live performances have taken place at Tate Britain, The Queen Elizabeth Hall, The Contemporary Art Society, Spike Island, Zabludowicz Collection, Cafe Oto, MK Gallery, and Resonance FM. His ‘Broken Patchbay’ EP was released in March 2012.

Richards has also worked as a technical consultant for many artists and galleries, specializing in sound, 16mm/35mm film, and bespoke electronics. He has been designing, building and performing his own electronic musical instruments since 2006, and this work led him to his current project: a collaborative doctoral award between Goldsmiths and the Science Museum, researching the life and work of Daphne Oram, electronic music pioneer and co-founder of the BBC Radiophonic Workshop.

Larry Cuba – Generative Animation, A Personal History

Next year will mark the 40th anniversary of the release of my first computer-animated film, First Fig, produced while I was a student at CalArts in 1974.

The computers at that time were huge mainframe systems in large institutions available only for data processing projects for scientific research or business operations. Computer time for art needed to be begged from a sympathetic administrator and if granted, was limited to the hours after midnight and on the weekends.

The systems were extremely crude by today’s standards, of course: less powerful than a smartphone, and with no authoring systems for creating applications. The rare system with graphics capabilities had black-and-white vector screens comparable to an Etch-a-Sketch toy. But the prospect of generating animated geometric forms choreographed via algebraic algorithms was irresistible. Perseverance was mustered; system limitations were accepted and endured. Over the years, as computer graphics technology developed and became more accessible, I moved from one computer to the next as each became available, and created a small series of films.
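
As a small example of geometric forms choreographed by algebraic rules, this Python sketch produces a sequence of frames, each a polyline suitable for a vector display, tracing the rose curve r = cos(kθ) while k changes from frame to frame. The curve and the names `rose_frame` and `animation` are illustrative assumptions; they are not the algorithms behind any of Cuba’s films.

```python
import math


# Hypothetical sketch: an algebraically defined animated form for a vector
# display. Each frame is a polyline tracing the rose curve r = cos(k * theta);
# changing k from frame to frame makes the figure unfold over time.
def rose_frame(k, n_points=200, scale=1.0):
    """Return the (x, y) vertices of one frame, to be joined by line segments."""
    vertices = []
    for i in range(n_points + 1):
        theta = 2 * math.pi * i / n_points
        r = scale * math.cos(k * theta)
        vertices.append((r * math.cos(theta), r * math.sin(theta)))
    return vertices


def animation(n_frames, k_start=1.0, k_end=5.0):
    """Interpolate the petal parameter k evenly across the sequence."""
    step = (k_end - k_start) / max(n_frames - 1, 1)
    return [rose_frame(k_start + f * step) for f in range(n_frames)]


frames = animation(24)
print(len(frames), "frames of", len(frames[0]), "vertices each")
```

Producing line segments rather than filled pixels matches the constraint of a black-and-white vector screen: each frame is simply a list of points for the beam to trace.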

This talk will survey the works, the different systems used to create them, and the influence the ever-changing technology had on the process of my experimental generative animation.

Biography:

Larry Cuba, a pioneer in computer art, produced his first computer-animated film in 1974. The following year, Cuba collaborated with John Whitney, Sr., programming the film Arabesque.

Cuba’s subsequent computer-animated films, 3/78 (Objects and Transformations), Two Space and Calculated Movements, have been screened at film festivals throughout the world, including Los Angeles, Hiroshima, Zagreb and Bangkok, and have won numerous awards. Cuba has been invited to present his work at conferences on computer graphics and art such as Siggraph, ISEA, Ars Electronica and Art and Math Moscow. His films have been included in exhibitions at New York’s Museum of Modern Art, The Whitney Museum, The Hirshhorn Museum, The San Francisco Museum of Modern Art, The Art Institute of Chicago, The Amsterdam Filmmuseum and The Pompidou Center, Paris.

Cuba received fellowship grants from the American Film Institute and The National Endowment for the Arts, and was an artist-in-residence at the Center for Art and Media Technology Karlsruhe (ZKM). He has served on the juries for the Siggraph Electronic Theater, the Siggraph Art Exhibition, The Ann Arbor Film Festival, and Ars Electronica.

In 1994, he founded The iotaCenter, a non-profit organization dedicated to the art of abstract animation and visual music. More information can be found at www.well.com/user/cuba

Dr. Aimee Mollaghan – Between the Binary And Baroque: John Whitney’s Search for “a New Language for a New Art”

Through his pioneering work in the field of digital animation, filmmaker John Whitney arguably created a new language for audiovisual composition that has been taken up and built on by successive generations of animators, composers and filmmakers, many of whom are unaware of the origins of this legacy. Writing in 1946, Whitney predicted: “Perhaps the abstract film can become the freest and the most significant art form of the cinema. But also, it will be the one most involved in machine technology, an art fundamentally related to the machine” (1946: 144). Throughout his career Whitney strove continually to integrate technology more closely with the creative process, in order to fully realise his ambition of creating abstract films predicated on the idea of a mathematical harmony that underlies everything. By paying close attention to Whitney’s digital films, the culmination of his theories on mathematical harmony, this paper will investigate Whitney’s formulation of a new language for his new cinematic art. Further to this, it will examine how Whitney imbues his work with a metaphysical quality by using mathematical harmony as its foundation, creating a connection between number, the cosmos, music and image, and thus extending ideas of universal harmony beyond what even the Pythagoreans had envisaged so many centuries ago.
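
Whitney’s approach to mathematical harmony is commonly summarised as differential motion: each element moves at a rate proportional to its index, so at simple fractions of the cycle the elements fall into a few aligned groups, much as simple frequency ratios sound consonant. The Python below is a simplified sketch of that idea with hypothetical function names; rather than drawing the points, it counts how many directions they share at a given moment.

```python
import math


# Simplified sketch of differential motion: point i turns i times as fast as
# point 1, so at simple fractions of the cycle the points fall into a few
# aligned groups, a visual counterpart of consonant frequency ratios.
def positions(n_points, t, radius_step=1.0):
    """Point i (1..n) sits at angle 2*pi*i*t on a circle of radius i*radius_step."""
    out = []
    for i in range(1, n_points + 1):
        angle = 2 * math.pi * i * t
        out.append((i * radius_step * math.cos(angle), i * radius_step * math.sin(angle)))
    return out


def distinct_directions(n_points, t, digits=6):
    """Count how many different directions the points face at time t (in cycles)."""
    directions = set()
    for i in range(1, n_points + 1):
        turn = (i * t) % 1.0                          # fraction of a full turn
        directions.add(round(turn, digits) % 1.0)     # treat 0.999999... as 0
    return len(directions)


for t in (1 / 2, 1 / 3, 1 / 7, 0.123):
    print(round(t, 3), distinct_directions(24, t))
```

At t = 1/2 the 24 points collapse onto two directions and at t = 1/3 onto three, whereas a value such as 0.123 leaves all 24 pointing in different directions: the pattern resolves into order only at simple ratios.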

Biography:

Dr. Aimee Mollaghan is the BA with film studies co-ordinator at the Huston School of Film and Digital Media, N.U.I. Galway in Ireland. Her research interests include exploring conceptions of sound and soundscape across disciplinary boundaries. She is also interested in notions of psychogeography and soundscape in British and Irish film.

Richard Stamp – The computer artist as media archaeologist: John Whitney’s ‘gadgeteering’

The abstract film animations produced by John Whitney, Sr. (and brother James) were accompanied by anticipations of new possibilities for the art form of cinema – a new ‘visual music’ intrinsically linked to the machine. Yet Whitney also presents his experimentation as that of an ‘archaeologist-engineer’ of image-making technologies, adapting a variety of discarded gadgets and military surplus – from 19th-century inventions such as Foucault pendulums and pantographs to WWII anti-aircraft fire control systems – in his pursuit of precision motion control mechanisms. This paper explores the continuities between this archaeological practice of repurposing discarded analog mechanisms and Whitney’s first film-making experiments with digital computers.

Biography:

Richard Stamp teaches film and literature at Bath Spa University. His research and teaching combine interests in moving image cultures and technologies (especially animation) with philosophy and cultural theory. His most recent publications are ‘Experiments in Motion Graphics – or, when John Whitney met Jack Citron and the IBM 2250’ (Animation Studies 2.0 – http://blog.animationstudies.org/?p=426); and ‘Jacques Rancière’s Animated Vertigo’, a chapter in Rancière and Film (Edinburgh UP, 2013). He is currently researching the animation mechanisms and films of John Whitney Sr.

Dr. Jules Rawlinson – Signature Sound: From Tag to Tone – Graffiti as Graphic Score

Asking the question “How do you write down sound?”, this paper will address design methodologies for indicating and interpreting typology, morphology and gestural structures in compositions for live electronics. These build on the analysis of sound-objects by Lasse Thoresen (Thoresen and Hedman 2007, 2009, 2010) and on the author’s own PhD portfolio (Rawlinson, 2011), with a focus on new research and practice that incorporates elements of graffiti tags into graphic scores. Tagging, grounded in B-Boy culture, has a musicality of rhythm and repetition; importantly, tags seem spontaneous and improvised, yet crafted and cultivated.

Graffiti tags are by turns angular, abstract, reductive, compressed, contained, expressive, expansive, iconic and symbolic, with lines that connect and organise, shifts in weight, arcing trajectories, ASCII-like decoration, punctuation and texture, and embodied formal and filigree qualities that reflect the author’s own preferences for working with gestural interfaces in performance, including graphics tablets, the 3D SpaceNavigator and digital turntables.

The paper references Smalley’s work on Indicative Fields and Indicative Networks (1992), Kandinsky’s writings on Point, Line and Plane (1926), and Roth’s research into Graffiti Analysis (Roth et al., 2004), Graffiti Taxonomy (Roth, 2005) and Graffiti Markup Language (.gml) (Roth et al., 2010). GML fits into an established framework of mapping and archiving while offering opportunities for sequencing and sonification in environments like MaxMSP/Jitter.
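
GML stores a tag as strokes made of timed points, which makes it straightforward to sequence. This Python sketch assumes the basic GML layout of `<stroke>` elements containing `<pt>` elements with `<x>`, `<y>` and `<t>` children, and parses it with the standard library. The sample tag is made up, and the sonification rule in the hypothetical `to_events` (height to MIDI note, time to onset, first point of each stroke flagged as an accent) is an invented mapping; the paper itself points to MaxMSP/Jitter rather than Python.

```python
import xml.etree.ElementTree as ET

# A tiny hand-written example in the assumed GML layout (two strokes).
SAMPLE_GML = """<gml spec="1.0"><tag><drawing>
  <stroke><pt><x>0.1</x><y>0.2</y><t>0.00</t></pt>
          <pt><x>0.4</x><y>0.6</y><t>0.15</t></pt></stroke>
  <stroke><pt><x>0.5</x><y>0.9</y><t>0.40</t></pt></stroke>
</drawing></tag></gml>"""


def read_strokes(gml_text):
    """Return a list of strokes, each a list of (x, y, t) floats."""
    root = ET.fromstring(gml_text)
    strokes = []
    for stroke in root.iter("stroke"):
        points = []
        for pt in stroke.iter("pt"):
            points.append((float(pt.findtext("x")),
                           float(pt.findtext("y")),
                           float(pt.findtext("t"))))
        strokes.append(points)
    return strokes


def to_events(strokes, low_note=48, span=36):
    """Hypothetical sonification: (onset in s, MIDI note from y, is stroke start)."""
    events = []
    for points in strokes:
        for j, (_x, y, t) in enumerate(points):
            events.append((t, low_note + round(y * span), j == 0))
    return sorted(events)


for event in to_events(read_strokes(SAMPLE_GML)):
    print(event)
```

Keeping the original timestamps as onsets preserves the rhythm of the writer’s hand, which is the musicality of tagging the abstract describes.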

Biography:

Jules Rawlinson works with sounds and images and performs with live electronics. He has completed commissions for sound installations and performances, reactive graphics, and online interactives for the BBC, New Media Scotland, Glenmorangie, Cybersonica, Future of Sound / Future of Light, and the recently revived Radiophonic Workshop among others.

He is a founding member of the LLEAPP network (Laboratory for Laptop and Electronic Audio Performance Practice), which has fostered an ongoing series of workshops and events at a number of UK institutions, and completed a PhD in Composition at the University of Edinburgh in 2011.

Jules is currently a Teaching Fellow in Digital Media at Edinburgh College of Art (The University of Edinburgh), with research interests comprising adaptable interfaces for multimedia performance and sound design, graphic notation, and creative coding.

Tom Mitchell – Designing audio interaction within the danceroom Spectroscopy quantum mechanics simulation and Hidden Fields performance

danceroom Spectroscopy (dS) is a multi-award winning art-science project exploring new languages and crossovers on the interface of computational physics and interactive art. Fusing rigorous quantum mechanics and high-performance computing, dS uses 3D imaging technology to interpret people as energy fields, allowing their movements to create ripples and waves within a virtual sea of energy.

By transforming the real-time physics simulation into graphics and soundscapes, dS invites people to use their movements to sculpt the fields in which they are embedded, offering a unique and subtle glimpse into the invisible energy matrix and atomic world that form the fabric of nature.

This presentation will discuss the many channels of interaction that have been created between the underlying physics simulation and the graphical and sound-producing elements of dS. This flexible, interconnected network of information allows any of these elements to take the lead in generating artistic content, and it has been used extensively within the acclaimed dance performance Hidden Fields.

Biography:

Tom Mitchell is a Senior Lecturer in computer music at the University of the West of England in the Department of Computer Science and Creative Technologies. With a background in computer science and audio processing his research applies machine learning and artificial intelligence to support musical and artistic process. Much of his research is at the intersection of art and science and almost always supports artistic performances or installations.

Fernando Falci de Souza – Concepts and Creation of Rain: An audiovisual Granular Synthesis work

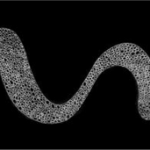

In this paper we present the theoretical concepts of the Audiovisual Atomic Correspondence, in which the Granular Synthesis of sound is poetically extended to the visual domain. The visual expression results in complex textures constructed with simple and abstract grains of image. We also describe the creative process of Rain, a Granular Visual Music composition for synthetic sound, acoustic bass and visuals, based on the proposed atomistic paradigm.
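
To make the idea of an audiovisual grain concrete, here is a minimal sketch, assuming a simple random grain scheduler and a one-to-one mapping of each grain's parameters onto a dot on screen (onset to x, pitch to y, duration to size, amplitude to opacity). The function names, parameter ranges and mappings are hypothetical illustrations of the atomistic paradigm, not the method used in Rain.

```python
import math
import random

SAMPLE_RATE = 8000  # hypothetical low sample rate to keep the sketch light


def grain_cloud(n_grains, total_s, seed=1):
    """Hypothetical scheduler: each grain is one 'atom' shared by sound and image."""
    rng = random.Random(seed)
    return [
        {
            "onset": rng.uniform(0.0, total_s * 0.9),  # seconds
            "dur": rng.uniform(0.02, 0.08),            # seconds
            "freq": rng.uniform(200.0, 1200.0),        # Hz
            "amp": rng.uniform(0.1, 0.3),
        }
        for _ in range(n_grains)
    ]


def render_audio(grains, total_s):
    """Sum Hann-windowed sine grains into a mono buffer of floats."""
    buf = [0.0] * int(total_s * SAMPLE_RATE)
    for g in grains:
        start = int(g["onset"] * SAMPLE_RATE)
        length = int(g["dur"] * SAMPLE_RATE)
        for i in range(length):
            idx = start + i
            if idx >= len(buf):
                break
            window = 0.5 - 0.5 * math.cos(2 * math.pi * i / (length - 1))
            phase = 2 * math.pi * g["freq"] * i / SAMPLE_RATE
            buf[idx] += g["amp"] * window * math.sin(phase)
    return buf


def render_visual(grains, total_s, width=640, height=480):
    """Map the same grains to dots: onset -> x, pitch -> y, duration -> radius, amplitude -> opacity."""
    dots = []
    for g in grains:
        x = g["onset"] / total_s * width
        y = height - (g["freq"] - 200.0) / 1000.0 * height  # higher pitch sits higher on screen
        dots.append((x, y, g["dur"] * 100.0, g["amp"]))
    return dots


grains = grain_cloud(50, total_s=1.0)
audio = render_audio(grains, total_s=1.0)
dots = render_visual(grains, total_s=1.0)
```

Because both renderers read the same grain list, every sonic atom has exactly one visual counterpart; a denser or sparser cloud changes the texture of sound and image together.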

Biography:

Fernando Falci de Souza is a PhD student at the University of Campinas, Brazil. His current research deals with the creation of Visual Music works based on an atomistic paradigm. He holds degrees in Computer Science and in Music Performance, specialising in acoustic bass for popular music. His previous Master's research in Music examined the use of high-level computer models, e.g. Genetic Algorithms, to control granular synthesis. During internships at McGill University, he also researched the design of Digital Musical Instruments and the construction of Gestural Controllers. Most of the digital tools used in his research and compositions are of his own development, built using Java, Processing, Arduino and sensors.

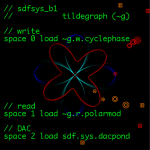

Samuel Freeman – the tildegraph concept in sdfsys

Having attended the first Seeing Sound symposium shortly before commencing my AHRC supported PhD at Huddersfield, a paper presentation is now proposed for the 2013 symposium that will summarise the trajectory and outcomes of my practice-led research from the past four years. The title of my (soon to be submitted) thesis is: Exploring visual representation of sound in computer music software through programming and composition.

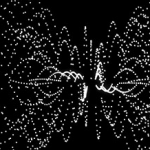

This presentation will focus on the ‘tildegraph’ concept that was developed as part of my ‘sdfsys’ programmable soundmaking environment, which is a modular system created in MaxMSP/Jitter with a jit.gl-based interface. An early version of sdfsys (sdf.sys_alpha) was demonstrated on the live coding DVD of the Computer Music Journal (2011, 35:4, pp.119-137). Examples of ‘sdfsys_b’ can be found online, and the current (_beta2) version of the software will be made public around the time of the symposium.

The tildegraph concept forms the primary method of soundmaking within sdfsys. This paper will describe how that concept emerged through a number of audiovisual software compositions, and how the tildegraph went from being a specific manifestation of visual synthesis within a modular system to something of an archetypal model for making sound by controlling shapes on screen, where those shapes are direct representations of the same data that is then processed as sound.
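
As a rough illustration of the principle that on-screen shapes and sound share the same data, the following sketch draws a shape into a grid of values and then reads a path through that grid as one cycle of a waveform. It is a hypothetical, simplified model (the grid size, the circular read path and all function names are assumptions); sdfsys itself is built in MaxMSP/Jitter, and its tildegraph implementation is not reproduced here.

```python
import math

SIZE = 64  # hypothetical grid resolution


def draw_circle(size, cx, cy, r):
    """Draw a filled circle into a size x size grid of floats in [0, 1] (the 'image')."""
    grid = [[0.0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            if (x - cx) ** 2 + (y - cy) ** 2 <= r * r:
                grid[y][x] = 1.0
    return grid


def circular_path(size, cx, cy, r, n):
    """A circular read path of n points; one lap of it yields one waveform cycle."""
    pts = []
    for i in range(n):
        a = 2 * math.pi * i / n
        x = int(round(cx + r * math.cos(a))) % size
        y = int(round(cy + r * math.sin(a))) % size
        pts.append((x, y))
    return pts


def read_path(grid, points):
    """Read grid values along the path, mapping [0, 1] to audio samples in [-1, 1].
    The values drawn on screen are the very values that become the waveform."""
    return [grid[y][x] * 2.0 - 1.0 for x, y in points]


grid = draw_circle(SIZE, cx=20, cy=32, r=12)
# The read path passes in and out of the drawn shape, producing a pulse-like wave;
# moving or resizing the shape changes how much of the cycle sits at +1.
cycle = read_path(grid, circular_path(SIZE, cx=32, cy=32, r=16, n=256))
```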

Biography:

I make things to make noise with, and then make noises with them. These things are made both inside computers (where interactive systems are programmed mostly in Max/MSP/Jitter), and in the more physical realm (where electroacoustic contraptions are hacked together using recycled/re-contextualised components). With an experimental approach to performance practice I have regularly appeared with the Inclusive Improv, of which I was co-founder, and with the HELOpg laptop ensemble; I have been performing with laptops as instruments since 2005. I have, since 2009, been working on a PhD at the University of Huddersfield with supervision from Michael Clarke and Monty Adkins; this was supported by the Arts and Humanities Research Council.

I have various online presences, and have recently started to compile my works at www.ExperimentalMusicTechnology.co.uk.

Laura Steenberge and Jaroslaw Kapuscinski – Error in Metaphor: Image as Music in 6 Intermedia Compositions

The paper is a discussion of fixed media works that explore metaphors between the audio and visual sensory modalities. Poetic metaphors consist of concepts that overlap in meaning but form a logical error. In order to allow metaphorical relationships in intermedia, first, a deliberate balance of audio and video materials must be reached. This suggests individualized attenuation of vision’s dominance. Second, a meta-sensory common ground and its limits must be explored and engaged. The nature of this engagement in each of the works is the focus of our analysis, but it is the error in the metaphor which most strongly powers and defines each artist’s creative idiom.

Biography:

Laura Steenberge is a musician, thinker, science fan and shapeshifter. A generalist more than a specialist, she explores many forms of musical and intermedia work. Music pursuits include performance of both folk and art music as a contrabassist, viola da gambist, guitarist, pianist, singer, composer and songwriter. Other pursuits include studies of language and gesture, acoustics and psychoacoustics, origin stories, shape-based metaphors and animals. The results of these studies manifest as performance lectures, movement-based performances, drawings, videos and writings. Since 2011 she has been pursuing a doctorate in music composition at Stanford University. Previously she received an MFA in performance, composition and integrated media from the California Institute of the Arts and BAs in music and linguistics from the University of Southern California.

Iris Garrelfs – Traces in/of/with sound: an experience of audio-visual space

Traces in/of/with Sound is an audio-visual performance series, developed as part of the author’s PhD research into the creative processes in sound arts practice.

Whilst tangentially referring to visual music (see, for example, the work of Norman McLaren), the series investigates the expressive juxtaposition between a fixed film of drawing and improvised, processed voice, and how exploring this relationship affects understandings of music.

Several versions have taken place since 2010, each with a different audio-visual spatial configuration, from mono / single screen to 8-channel / 2 screen. The core visual material also changed during this time.

This presentation will follow the development of the piece over the two years from 2011 to 2013, focusing on the performer’s experience of audio-visual spatiality and its ingress into the conceptual spatiality prompted by these experiences. Some strategies that may promote experiential spatial coherence or disunity in the experience of audio-visual space will be explored.

Biography:

Iris Garrelfs is an artist “generating animated dialogues between innate human expressiveness and the overt artifice of digital processing”, as The Wire magazine put it. Others have compared her work to that of artists such as Yoko Ono, Henri Chopin, Joan La Barbara, Meredith Monk and Arvo Pärt.

Moulding complex sonic or multi-sensorial collages, she has presented work in exhibitions, festivals and residencies internationally, including Hack the Barbican (2013), Liverpool Biennial (2012), International Computer Music Conference (NY 2010), GSK Contemporary at the Royal Academy of Arts (2008/09), Gaudeamus Live Electronics Festival (Amsterdam 2007), and Visiones Sonoras (Mexico 2006). Collaborators have included Thomas Köner (Futurist Manifest), Scanner and more.

Iris is one of the founding directors/curators of Sprawl, now in its 16th year, which advocates experimental sound through live events and recordings and has seen collaborations with the Tate Modern and the Goethe Institute, featuring internationally renowned artists such as David Toop, Vladislav Delay, Pole and many more. She is currently an AHRC PhD research fellow at LCC in London, where she also teaches on the BA Sonic Art. In a previous incarnation as a photographer, Iris was published by magazines such as The Wire, Marie Claire and others.

Ryo Ikeshiro – Audiovisualisation

The session will involve the presentation of two audiovisualisation works by the author based on a three-dimensional Mandelbox fractal: Composition: White Square, White Circle (2013) and Construction in Kneading (2013). The aesthetic implications of the approach of audiovisualisation and its roots in visual music will be discussed.

Audiovisualisation is the simultaneous sonification and visualisation of the same data. Despite the relative ease with which this technique can be carried out, it still remains largely unexplored in comparison to the proliferation of A/V work in general. My claim is that audiovisualisation can serve a didactic purpose through allowing the underlying process behind abstract art to become understood. In addition, I believe that through this approach, the combination of sound and moving image may provide a possible solution to the problematic spectacle of a performance on any electronic device such as a laptop.
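
A minimal sketch of audiovisualisation, in which one data stream is simultaneously sonified and visualised, might look like the following. It assumes the 2D Mandelbrot set as a stand-in for the three-dimensional Mandelbox used in the works above, and the mappings (escape count to grey level, and to a pitch spanning four octaves above 110 Hz) are illustrative choices, not the author's.

```python
def escape_count(c, max_iter=64):
    """Escape-time iteration z -> z*z + c (2D Mandelbrot set, standing in for the 3D Mandelbox)."""
    z = 0j
    for n in range(max_iter):
        z = z * z + c
        if abs(z) > 2.0:
            return n
    return max_iter


def audiovisualise_row(y, x_min=-2.0, x_max=0.5, width=32, max_iter=64):
    """Scan one row of the complex plane; each escape count drives BOTH a pixel and a pitch."""
    frames = []
    for i in range(width):
        x = x_min + (x_max - x_min) * i / (width - 1)
        level = escape_count(complex(x, y), max_iter) / max_iter  # shared datum in [0, 1]
        grey = int(255 * level)                                  # visualisation: brightness
        freq = 110.0 * 2 ** (level * 4)                          # sonification: 110 Hz to 1760 Hz
        frames.append((grey, freq))
    return frames


frames = audiovisualise_row(0.0)
```

Neither stream follows the other: the grey value and the frequency are two readings of the same escape count, which is the distinction the text draws against visualisations driven by an existing soundtrack.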

The analogue precursor to audiovisualisation is visual music, and in particular the practice of drawing directly onto film along with the accompanying optical soundtrack. Such works can be associated with the Structural/Materialist film movement of the 1970s in Britain, whose theory is applicable to the approach of audiovisualisation.

Within the framework of New Media and digitisation, generative moving image graphic scores and in particular live audiovisualisation take full advantage of the capabilities of “transcoding” and “variability”. Thus they are idiomatic uses of digital technology that are also relevant to the cultural context of our times.

Biography:

Ryo Ikeshiro is a UK-based Japanese artist. His works range from live A/V performances and interactive installations to generative music pieces and scored compositions. He has presented his works internationally at media art and music festivals, as well as at academic conferences. His works have been released on the digital platform s[edition] and he is featured in the Electronic Music volume of the Cambridge Introductions to Music series.

His practice-based research at Goldsmiths involves “live audiovisualisation”, where the same data and process produce both the audio and the visuals in real-time, without either one following the other – as seen in most VJ performances and visualisations. Emergent systems are used as the basis for creating custom-made programs to generate sound and moving image. These could be compared to instruments or machines which are chaotic and unpredictable. In performance, he attempts to understand and control both of these components in a duet – or duel.

He curates exhibitions, screenings, and a series of events called A-B-A featuring performances, talks, and discussions. He has also published articles in journals such as Organised Sound.

Thor Magnusson – Tunings and microtonality with the Threnoscope

This paper presentation discusses the compositional ideas that underpin the development of the live coding improvisational system called Threnoscope. The system aims to remove, or at least de-emphasise, time from musical performance, using drones that slowly circulate around a spatial surround speaker setup. The visual representation of the system serves as a representational notation, giving indications of harmonic relationships between drones. The system has a strong capacity for microtonal composition, implementing support for the Huygens-Fokker Scala format and its accompanying archive of over 4000 microtonal scales and tunings.
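
For readers unfamiliar with the Scala format, the sketch below parses the plain-text `.scl` layout: lines beginning with `!` are comments, the first remaining line is a description, the next gives the number of notes, and each pitch line is either a cents value (containing a period) or a ratio such as `3/2`, with the 1/1 unison left implicit. It is a minimal reading of the format written for illustration, not the Threnoscope's own parser, and it performs no validation; the example scale and the 220 Hz base are assumptions.

```python
import math
from fractions import Fraction


def parse_scl(text):
    """Return (description, cents) from Scala .scl text; 1/1 (0 cents) is implicit."""
    lines = [ln.strip() for ln in text.splitlines() if not ln.strip().startswith("!")]
    description = lines[0]
    count = int(lines[1].split()[0])
    cents = []
    for raw in lines[2:2 + count]:
        token = raw.split()[0]  # any text after the value is ignored
        if "." in token:        # a period marks a value in cents
            cents.append(float(token))
        else:                   # otherwise a ratio such as 3/2, or an integer such as 2
            cents.append(1200.0 * math.log2(float(Fraction(token))))
    return description, cents


def degrees_to_hz(cents, base_hz):
    """Frequencies for the implicit 1/1 plus each listed degree above base_hz."""
    return [base_hz] + [base_hz * 2 ** (c / 1200.0) for c in cents]


EXAMPLE_SCL = """! just5.scl
!
Five-note just intonation scale (illustrative)
 5
!
 9/8
 5/4
 3/2
 5/3
 2/1
"""

description, cents = parse_scl(EXAMPLE_SCL)
freqs = degrees_to_hz(cents, base_hz=220.0)
```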

The paper also discusses the two modes of composing for the system: through live coding and through generative scores. The score system uses code as score elements, which can be visualised on a timeline. Finally, the paper discusses the topic of authorship in open source software systems in the musical domain.

Biography:

Thor Magnusson’s background in philosophy and electronic music informs his prolific work in performance, research and teaching. His work focusses on the impact digital technologies have on musical creativity and practice, explored through software development, composition and performance. Thor’s research is underpinned by the philosophy of technology and cognitive science, exploring issues of embodiment and compositional constraints in digital musical systems. He is the co-founder of ixi audio (www.ixi-audio.net), and has developed audio software and systems of generative music composition, written computer music tutorials and created two musical live coding environments. As part of ixi, he has taught workshops in creative music coding and sound installations, and given presentations, performances and visiting lectures at diverse art institutions, conservatories, and universities internationally. Thor has presented his work and performed at various festivals and conferences, such as Transmediale, ISEA (International Symposium on Electronic Art), Ertz, RE:New, Sonar, ICMC (International Computer Music Conference), NIME Conference (New Interfaces for Musical Expression), Impact festival, Soundwaves, Cybersonica, Ultrasound, and Pixelache. Thor lectures in music at the Music Department of the University of Sussex.

Lewis Sykes – The Augmented Tonoscope – Musical Interface and Composition

The Augmented Tonoscope is a Practice as Research PhD project working towards a deeper understanding of the interplay between sound and image in Visual Music.

While there are numerous examples of projects that deploy Cymatics, there are few that explore it as a means to create a form of visual or visible music – with the notable exception of John Telfer’s ‘Cymatic Music’. Telfer argues that a correspondence between musical note and cymatic pattern is more likely to be found in perfect interval, Just Intonation based music traditions – where the spans between notes are calculated from small whole number ratios. His ‘Harmonicism’ theory led to the development of the Lamdoma Matrix as a practical, creative resource for music making – extending the overtone and undertone progressions of the Pythagorean lamdoid by making these the axes of a 2D grid.
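
The grid construction described above can be sketched as follows: one axis carries the overtone series (1/1, 2/1, 3/1 ...) and the other the undertone series (1/1, 1/2, 1/3 ...), so the cell at row m and column n holds the ratio n/m. The 8 x 16 size (the button layout of a monome 128) and the 64 Hz fundamental are assumptions for illustration; this is a generic construction, not Telfer's or Sykes' implementation.

```python
from fractions import Fraction


def lamdoma(rows=8, cols=16):
    """Cell [m-1][n-1] holds n/m: row 1 is the overtone series, column 1 the undertone series."""
    return [[Fraction(n, m) for n in range(1, cols + 1)] for m in range(1, rows + 1)]


def to_hz(grid, fundamental_hz):
    """Convert every ratio in the grid into a frequency above a chosen fundamental."""
    return [[float(ratio) * fundamental_hz for ratio in row] for row in grid]


grid = lamdoma()              # one ratio per button of a hypothetical 8 x 16 controller
hz = to_hz(grid, 64.0)
print(grid[1][2], hz[1][2])   # row m=2, column n=3: the perfect fifth 3/2, i.e. 96.0 Hz
```

Because `Fraction` reduces each ratio, the diagonal where n equals m is a line of unisons (1/1), and other equal ratios recur along lines through the grid, which is part of what makes the matrix readable as a harmonic landscape.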

Struck by the similarity of this matrix to the form of the monome controller, Sykes has integrated both into a musical interface for his instrument, allowing him to access the tunings of the Lamdoma Matrix using the monome as a physical ‘window’ – its buttons become the ‘keys’ of a 2D keyboard. He has started to explore the harmonic landscape of this tuning framework and has worked out ways to record and compose using his own Nodal sequencer and the more conventional Ableton Live DAW.

This paper presents Telfer’s key arguments and demonstrates Sykes’ efforts to extend and develop Telfer’s ideas through his own work integrating cymatic patterns and harmonic-based virtual models.

Biography:

Lewis Sykes is an artist, musician and digital media producer/curator based in Manchester, UK. A veteran bass player of the underground dub-dance scene of the 90s he performed and recorded with Emperor Sly, Original Hi-Fi and Radical Dance Faction and was a partner in Zip Dog Records.

Honing an interest in mixed media through an MA in Hypermedia Studies at the University of Westminster in 2000, he continued to fuse music, visuals and technology through a series of creative collaborations – most notably as musician and performer with the progressive audiovisual collective The Sancho Plan (2005-2008) and currently as one-third of Monomatic – exploring sound and interaction through physical works that investigate rich musical traditions.

Director of Cybersonica, an annual celebration of music, sound art and technology (2002-11), he was also Coordinator of the independent digital arts agency Cybersalon (2002-2007), the founding Artists-in-Residence at the Science Museum’s Dana Centre.

Lewis is in the final year of a Practice as Research PhD at MIRIAD, Manchester Met, exploring the aesthetics of sound and vibration.

Hali Santamas – Composing in the ‘new aesthetics’: atmosphere as compositional material in audio-photographic art

As a sound artist, my work is concerned with the emotive interaction between photography and sound. In contemporary society we are conditioned to read music and image in a particular way, be it through album sleeves, promotional materials or multimedia art. I believe that the photography and images accompanying certain music releases are inseparable from the music itself: that one medium triggers an internal rendering of the other. These renderings result not just in surface associations, but in a deeper connection based on memory and emotion. As such, in my own work, I use image and sound to engender a sense of atmosphere.

Böhme and Benjamin have both written extensively on atmosphere in an attempt to define it. Drawing on their work and Deleuze’s writing on perception I propose an audiovisual reading of atmosphere. Through discussion of this reading and with reference to my own audio-photographic work as examples, this paper will demonstrate how I have attempted to create atmosphere and shape the audience’s experience of each audio-photographic piece.

Biography:

Hali is a musician, photographer and sometimes an amateur graphic designer. He currently writes electronic music and takes photographs inspired by artists such as Mount Eerie, Rinko Kawauchi, Slowdive, Parts & Labor, AMM and Tim Hecker. In the past he has released music under the name Tread Softly on Deadpilot Records and as part of ambient pop duo Metonym.

He is currently studying for a PhD at the University of Huddersfield, researching atmosphere, memory and emotion in photography and music, supervised by Professor Monty Adkins.

Jean Piché – Pitfalls of Togetherness: Hyper-synchronicity as inhibitor for metaphor in visual music

This talk will explore and critique a practice that is becoming the norm in recent visual music, namely the hyper-synchronous display of audio and visual information. Synchresis remains the most powerful integrator of audiovisuality, but as production tools have evolved in the past few years, much has been made of “parametric transduction”, whereby sonic material is analyzed into time-variant vectors that serve as the driving force to generate and control forms, colors and movement. While seductive and often compelling, this approach will be shown ultimately to foster an impoverishment of discourse and to lead to a tautological dead end where illustration overtakes expression as the final esthetic experience. Hyper-synchronicity, from Fischinger to McLaren, has driven much visual music in the past, but its attainment may prove unsatisfactory for an integrated esthetics of abstracted sound and image. Examples will be shown. Questions will be asked. Answers will be sought.
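
To show what “parametric transduction” means in practice, the sketch below analyses a signal into a time-variant vector (one RMS value per frame) and maps that vector directly onto the radius of a circle. The frame size, radius range, 8 kHz sample rate and faded test tone are arbitrary assumptions; the point is the one-to-one coupling the talk critiques, in which the image can only illustrate the sound.

```python
import math


def frame_rms(samples, frame_size):
    """Analyse a signal into a time-variant vector: one RMS value per non-overlapping frame."""
    out = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        out.append(math.sqrt(sum(s * s for s in frame) / frame_size))
    return out


def transduce(rms_values, min_radius=10.0, max_radius=200.0):
    """Map each RMS value (clipped to 1.0) straight onto a circle radius, frame by frame.
    This direct coupling is what makes the result hyper-synchronous."""
    return [min_radius + (max_radius - min_radius) * min(v, 1.0) for v in rms_values]


# A 440 Hz tone with a linear fade-in over one second, sampled at a hypothetical 8 kHz.
sr = 8000
signal = [(i / sr) * math.sin(2 * math.pi * 440 * i / sr) for i in range(sr)]
radii = transduce(frame_rms(signal, frame_size=400))
```

Every change in loudness is mirrored immediately in size, and nothing in the image can diverge from the sound, which is the tautology the talk warns against.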

Biography:

Jean Piché is a composer, video artist, software designer and professor at the Université de Montréal. His creative output over the past 40 years has explored the more exotic edges of high technologies applied to music and moving images, including visual music, live electronics, fixed electronic media and performance. He has received numerous international awards and his work has been presented in Europe, Asia and North America. He now concentrates his practice on visual music, focusing on parallel compositional strategies for abstracted visuals and music, a new hybrid form he has helped define. He has been heavily involved in software design, notably with the program Cecilia and the TamTam suite on the celebrated One Laptop per Child computer from MIT. He presently directs the institut Arts, Cultures et Technologies (iACT), a new media research collective at the Université de Montréal.

Dr. Steve Bird – Composing With Images

Much of the research undertaken within the music community on the combination of sound and imagery has related either to mainstream film-making or has been rooted in the predominantly abstract world of Visual Music. However, Steve Bird utilises recognisable “real-world” imagery to produce multimedia works that function as compositions in a musical sense, whilst combining processes that draw from visual, cinematic, literary, musical and sonic traditions. Through an understanding of the different types of imagery that these seemingly unrelated media employ, he has formulated a working praxis which endeavours to facilitate a deeper understanding of the way that these image-forms interrelate within a multimedia work, defining this strategy as image-based composition.

The key to his method of working is an understanding of the audiovisual image as being present when two or more different image forms coincide to produce an overall image that exists only because of this concurrence, and which would be significantly altered by the removal of any one of its constituent parts. He explores the interaction of sonic, visual and intellectual imagery. He explains the way that each of these component streams interacts in synchronised harmony and counterpoint, and the way each stream influences the others, through processes he terms cross-genre image visualisation, digital collision and image transference. He concludes that understanding the nature of sonic, visual and intellectual image-interaction within a multimedia composition depends on the multi-sensory nature of this medium, within which image-streams are in a constant state of flux.

Biography:

Having spent the first decade of his working life in the world of the theatre, Steve Bird then spent many years as a visual artist specialising in portraiture, and also as a blues musician, before returning to education as a mature student. In 2005 he graduated from Keele University with a first-class honours degree in Music and Music Technology, which was followed in 2006 by an MRes in Humanities, during which he specialised in audiovisual composition. He recently completed a PhD in composition, again at Keele, for which he was funded by the Arts and Humanities Research Council.

Working primarily with video, combining three-dimensional electroacoustic soundtracks with cinematic narratives, Steve thinks of his works very much as moving paintings. He likes to deconstruct the world around him and then use imagery, both sonic and visual, to reassemble it in a process similar to that of a poet or a writer of short stories.

Andrew Connor – Felix the (Electroacoustic) Cat

Felix the Cat was created by Patrick Sullivan and Otto Messmer, making his first official appearance in “The Adventures of Felix” in 1919. An animated star of the silent film era, Felix was a very recognisable, anthropomorphised black and white cat.

While the cartoons follow a narrative structure, Felix had a trademark way of interacting with elements in his surroundings – he pulls on his skin like trousers, and when he can’t find his tail, a question mark pops up over his head, an accepted artifice in drawn cartoons. He then pulls this down to attach to himself as his tail.

Visual tricks such as these are the animation equivalents of the techniques used in electroacoustic composition. Using the freedom of drawn animation, ‘real’ elements are malleable, adaptable into whatever the animator needs. Felix’s detachable tail can become a walking stick or a telescope. In the same way, the electroacoustic composer can detach elements of a sound object and adapt them to fit within the demands of their composition.

Felix and his early contemporaries in animation had a freedom of expression that is mirrored in electroacoustic composition, a freedom that we can also explore in our modern audiovisual practice. By comparing Felix’s visual tricks with electroacoustic compositional techniques, I will illustrate how the first animators and electroacoustic composers used the potential of these new artforms to experiment and play, and how we can also follow their examples in our own practice.

Biography:

Andrew Connor is an audiovisual composer based in Edinburgh. He is currently undertaking a PhD in Creative Music Practice at the University of Edinburgh, under the supervision of Prof. Raymond MacDonald. His research and practice examine the interaction of electroacoustic music and abstract animation in audiovisual composition. He completed an MSc in Sound Design at Edinburgh in 2008, prior to which he worked for several years in the financial side of the film, TV and multimedia industries. He continues to be involved with the film industry in Scotland in parallel with his studies.

Andrew’s work has been shown both in the UK and overseas, in Germany, Canada, the USA and Australia. His latest work, ‘Skitterling’, was selected for the International Computer Music Conference 2013 in Perth, Western Australia.

Rhys Davies – From the Laboratory of Hearing to Man with a Movie Camera – Sound as image in the work of Dziga Vertov

Although Vertov’s 1930 documentary film ‘Enthusiasm’ was his first to employ location, post and sync sound recording, it is his last silent film, ‘Man with a Movie Camera’, completed the previous year, that approaches the production of sound through the position and juxta-position of images. These are intended to access the viewer’s library of recollected aurality, so that the images are signified with remembered sound.

This paper examines the creative development of Vertov, from his early work in literary montage, inspired by the Futurists, in particular Luigi Russolo’s ‘Art of Noises’ manifesto (1913) and F.T. Marinetti’s ‘Parole in Libertà’ (an excerpt of which is included in Russolo’s manifesto), through his attempt to create a ‘Laboratory of Hearing’ in 1916, to his return to a literary, image-based montage approach in documentary filmmaking.

Vertov’s failure to create a sound effects library can be seen both as a failure of the acoustic audio capture technology of the period and as a dissonance of perception between what the machine captured and what the operator subsequently recalled.

Vertov’s abandonment of this project marked the end of his experiments with the capture of sound aurally and the start of his attempt to capture sound through the use of the visual image – a significant element within the process he called “Film truth”.

The paper concludes with a ‘sound’ reading of the opening sequence of ‘Man with a Movie Camera’.

Biography:

Rhys Davies began his professional career as the sound technician at the Wolsey Theatre in Ipswich in 1987. In 1993, he was appointed sound technician and Visiting Tutor at Goldsmiths College Drama Department. For the next few years he taught theatre sound and designed scenographic sound for London fringe venues including The Man in the Moon, The B.A.C., the Riverside Theatre, The Oval House, The Old Red Lion and The Young Vic. In the late 1990s, Rhys began writing music for television, working on projects for the BBC, Disney, FIFA, Sony, Sky Sports, Seagrams and Motorola. In 2001 he composed the series music and designed the sound for The History of Football, a thirteen-part documentary for FremantleMedia. Since 2002, Rhys has taught Creative Sound Design practice at the Media Arts Department, Royal Holloway, University of London.

Abstracts presented in chronological order of paper presentations.

All abstracts and biographies are as provided by the presenters.